The “IntellAct” project is developing a system that learns by observing assembly tasks demonstrated by people and has robots reproduce them. Such an intelligent system can then be used to deploy industrial robots faster, without conventional robot programming, or to monitor and support critical assembly tasks with robots, for instance on board the ISS.

From a technical perspective, a major challenge lies in combining the detailed signal level of manipulation (trajectories, forces and torques) with the abstract symbol level, at which the system learns to master and “understand” the assembly situation. For this purpose, the project team developed a concept that interprets and abstracts an observed human manipulation across the several steps needed for execution by robots, without losing essential details.

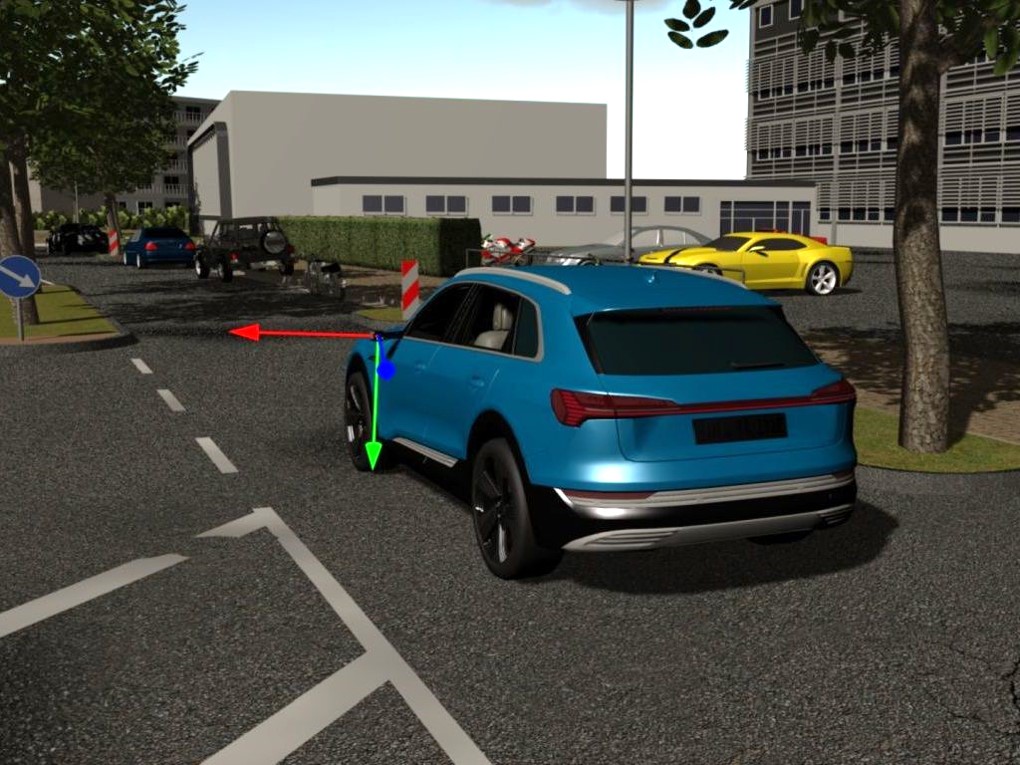

The contribution of MMI to “IntellAct” is a virtual test bed in which system components can be developed and trained under controlled, ideal conditions. Sensor and learning algorithms are thus trained in virtual reality: the trainer carries out assembly tasks with data gloves and can set and test error factors such as sensor noise and faulty grips. The work of MMI plays a particular role in allowing the “high-level” components to be developed at an early stage of the project; such development can generally only start after the sensor hardware has been selected, installed and commissioned.